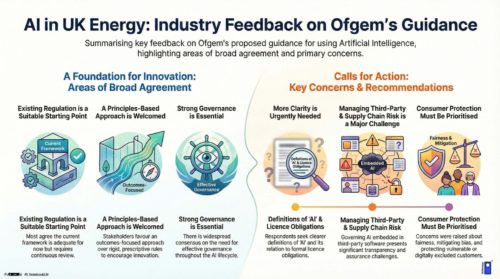

For those who missed it, here is a summary of the responses to the Ofgem consultation on the use of AI in the energy sector.

Training, digital and transparency were all themes, but what was also interesting was the importance of third-party and supply chain risk as use starts to expand. Governance and policy are going to become increasingly important to stay ahead in this area.

https://consult.ofgem.gov.uk/energy-technologies/ai-in-the-energy-sector-guidance-consultation/consultation/published_select_respondent

Key Takeaways

- Broad agreement with Ofgem’s principles-based, pro-innovation approach, qualified by requests for greater clarity, specificity, and more proactive risk management.

- A general view that the existing regulatory framework is adequate for the current state of AI, but needs continuous review to remain fit for purpose as AI evolves.

- Some disagreement, with certain respondents arguing that the framework is already insufficient, citing limited transparency, systemic failure risks, and ongoing negative consumer impacts from poorly deployed technology.

- A clearer definition of “AI” is needed, distinguishing simple automation, traditional machine learning, and advanced systems such as Generative AI, to enable proportionate governance and risk assessment.

- Third-party and supply chain risk emerging as a primary concern, particularly where AI is embedded in widely used vendor solutions (e.g., Microsoft Copilot), limiting licence holders’ ability to audit and assure controls.

- Many respondents emphasising consumer protection, with particular focus on vulnerable and digitally excluded consumers and the need to maintain effective non-digital channels.

- “Digital abandonment” was highlighted as a risk: consumers avoiding, or being unable to complete, journeys digitally.

- A risk of bias and discrimination from AI trained on historical data, including potential impacts on underserved groups and low-income households.

- Skills gaps and implementation costs were consistently cited as a barrier, spanning leadership AI literacy through to technical expertise.

Innovative ideas

- Introduce an AI risk incident log to standardise capture, analysis, and escalation of AI-related issues.

- Develop a tiered risk categorisation model with common AI use cases

- Use AI to proactively identify vulnerable customers

- Use AI-generated synthetic data to unlock valuable but sensitive datasets

- Establish an industry advisory panel to support organisations with key AI decisions and capability-building

Want to discuss further? Contact us here

Generated from ROStrategy knowledge base
